(final project) Pose Prompter

This blog post is my submission for the final project for A&HA-4084 at Teachers College.

Pose Prompter is a machine that generates and displays an image from two inputs: a text prompt and the user’s pose in front of a webcam. It combines real-time hand tracking with automated image generation, which makes it a novel way of prompting an AI image generator. The system has two components: ‘app_camera.py’ and ‘index-camera.html’ handle real-time hand tracking, while ‘app.py’ and ‘index.html’ send data to and receive data from the image generator. The flagship workflow is a moon above a beach horizon with aurora borealis.

I used several applications and platforms to make this project:

- GPT4: writing code and software walkthrough

- VS Code: writing code and file management

- GitHub: software and asking for advice

- MediaPipe: body tracking

- ComfyUI: image generating software (a version of Stable Diffusion)

*note: I refer to ComfyUI as ‘ComfyUI’ even when not referring to the user interface component of ComfyUI. It seems to be the convention in the community.

Code

All code for this project is available on GitHub at https://github.com/mkg2145/pose-prompter

The project is made up of two components: the camera component, which captures a screenshot of the JavaScript overlay, and the image generation component, which uses that screenshot as an input for the image generator (ComfyUI) and displays the output image on a webUI.

Camera component: ‘app_camera.py’ and ‘index-camera.html’

The first component of this project consists of two files: ‘app_camera.py’ and ‘index-camera.html’. Together they display the webcam feed, use MediaPipe for hand tracking, draw a JavaScript overlay of circles on the detected hand areas, and capture a screenshot every 200 ms. The screenshots are saved to a directory for immediate use by ‘app.py’.

Image generation component: ‘app.py’ and ‘index.html’

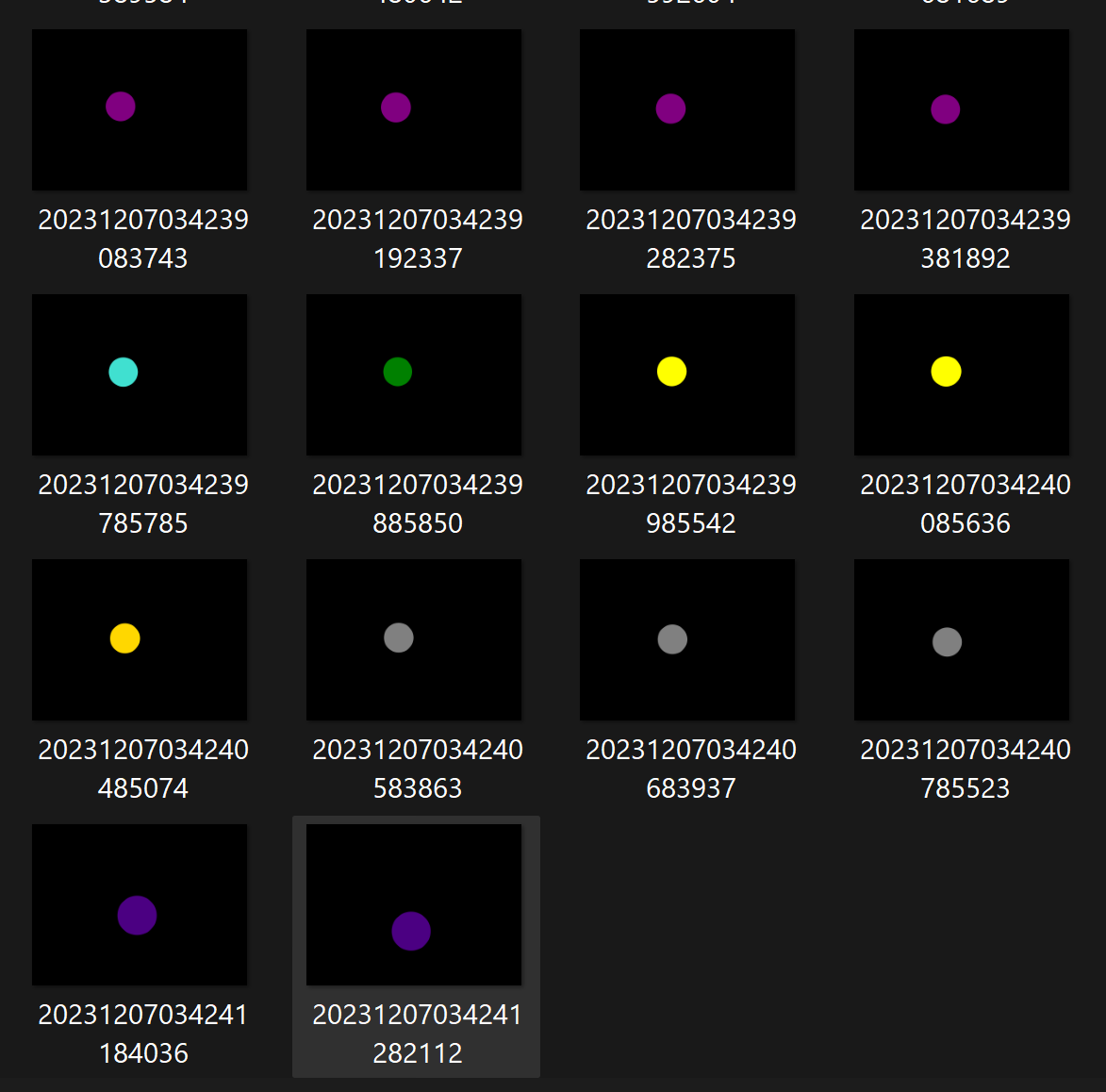

The second component feeds the screenshots (generated by the first component) along with a text prompt into ComfyUI (image generator) to generate and display an image onto a simple webUI at the rate of about 3 images per second.

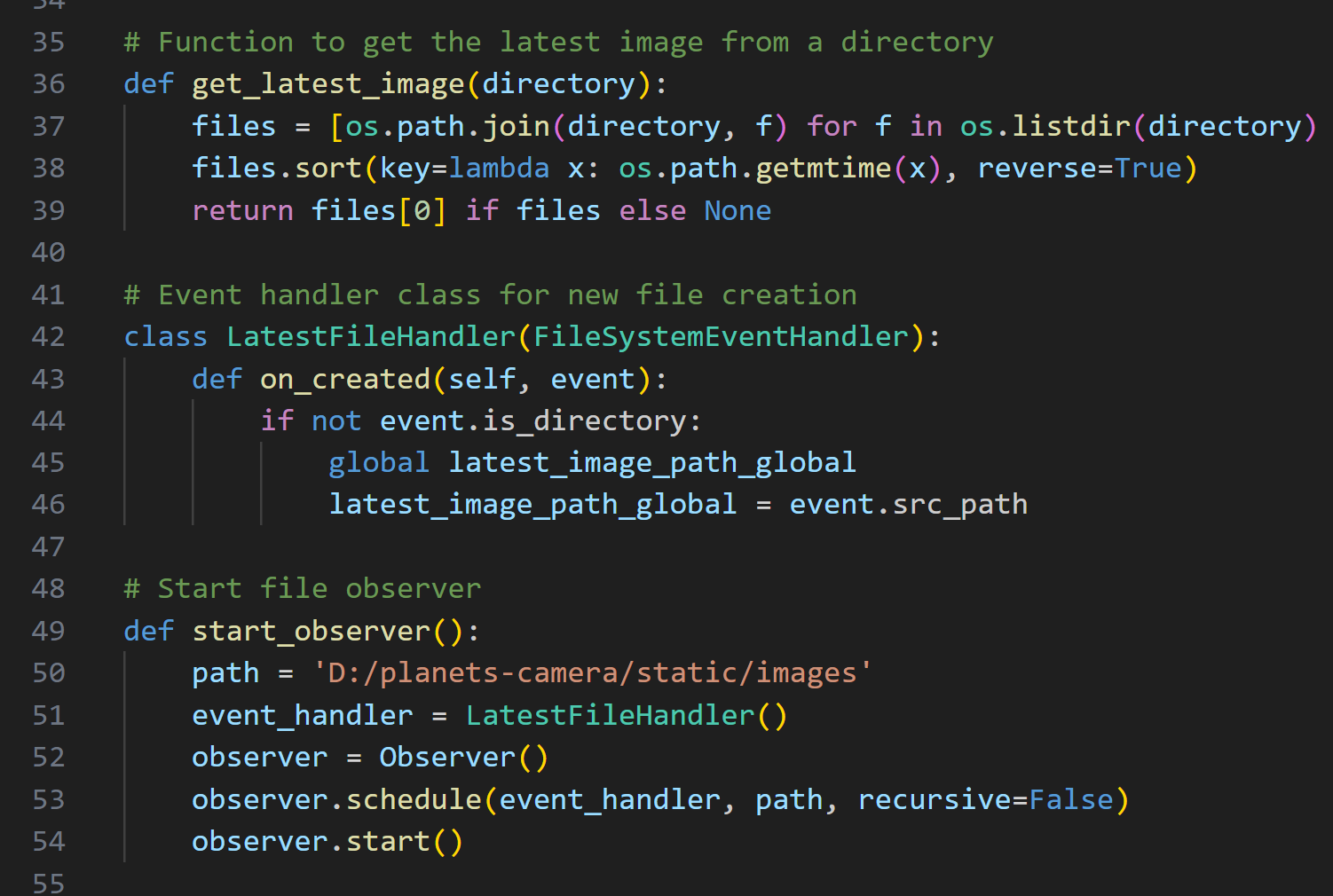

‘app.py’ monitors the screenshot-save directory on my PC for the newest file. We want the generated image to reflect the user’s real-time pose, so we need to send the latest screenshot to ComfyUI. I am familiar with monitoring folders for their latest file with Python, as I used this technique for my voice-to-notion app (April 2023).
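The idea of picking the newest file from a folder can be sketched roughly as follows. This is a minimal illustration, not the code in ‘app.py’: the function name `newest_file` and the `*.png` pattern are assumptions made for the example.

```python
from pathlib import Path
from typing import Optional


def newest_file(directory: str, pattern: str = "*.png") -> Optional[Path]:
    """Return the most recently modified file matching `pattern`, or None.

    `directory` and `pattern` are illustrative; the real screenshot folder
    and file extension may differ.
    """
    candidates = [p for p in Path(directory).glob(pattern) if p.is_file()]
    if not candidates:
        return None
    # Modification time decides which screenshot counts as the latest.
    return max(candidates, key=lambda p: p.stat().st_mtime)
```

Called in a loop (for example once per generation), this always returns whichever screenshot was written last. One caveat with this approach is that a file may be picked up while it is still being written, so a real loop may want to retry if the image fails to load.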

Workflows

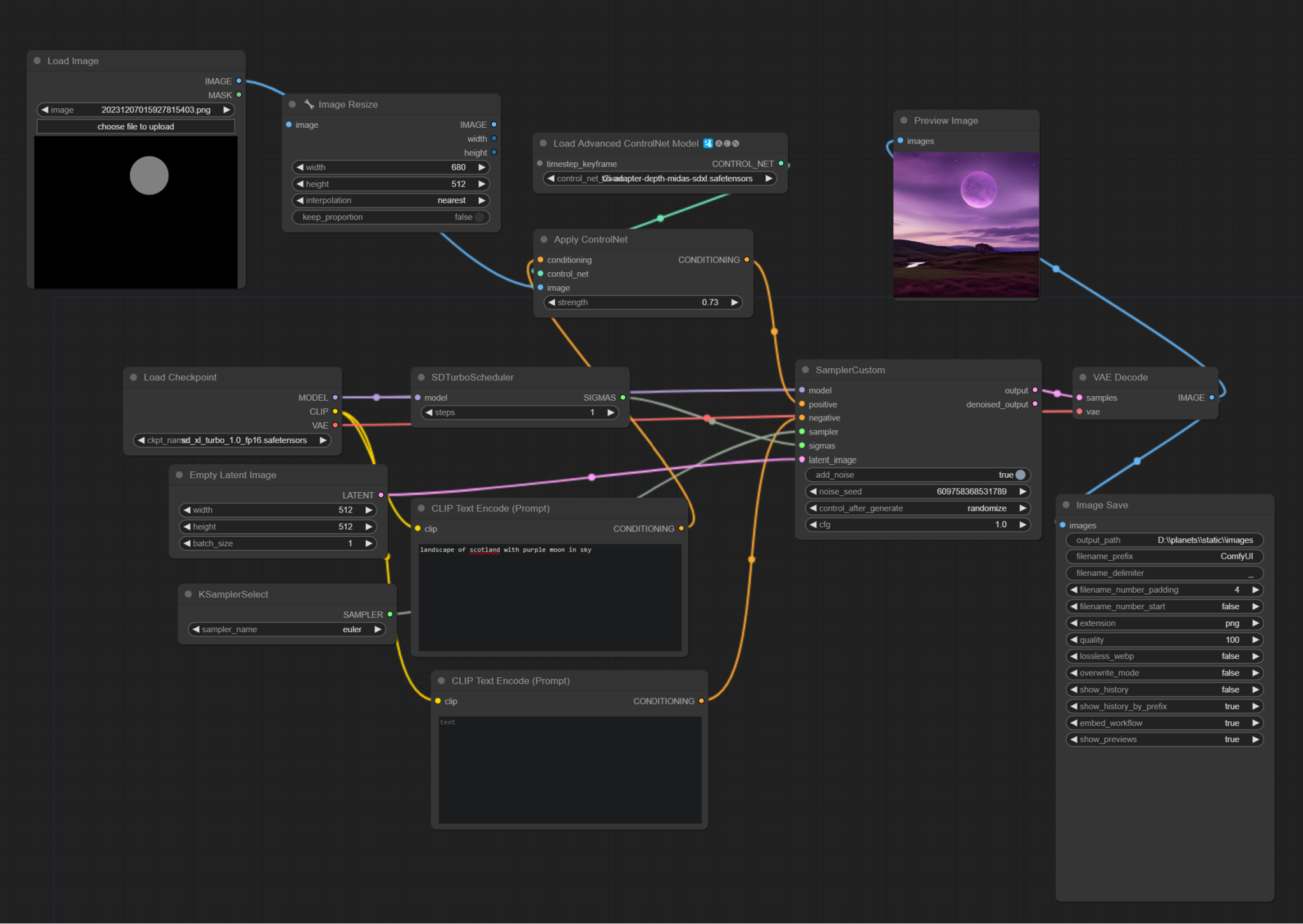

Workflows are a combination of JSON files made with ComfyUI’s point/click/drag user interface as well as the JavaScript overlay from ‘app_camera.py’ and ‘index-camera.html’. They are designed to work together. In this showcase example of a planet in the galaxy, ‘app_camera.py’ and ‘index-camera.html’ create circles that will represent planets, and the JSON file includes the text prompt and input image (screenshot).

To make the JSON file, we assemble our image generator by connecting nodes within ComfyUI. Once complete, we export the workflow as a JSON file which will be used by ‘app.py’.
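To give a feel for how an exported workflow can be used from Python, here is a small sketch. It assumes the workflow was saved in ComfyUI’s API format, where the JSON is a dictionary of node IDs, each with a `class_type` and `inputs`. The node IDs (`"6"`, `"10"`), the `LoadImageBase64` class name, and its `image` input are placeholders for this example and will differ in a real export.

```python
import copy
import json


def build_prompt_payload(workflow, text, image_b64,
                         text_node_id="6", image_node_id="10"):
    """Return a request body with the text prompt and input image filled in.

    The node IDs are hypothetical defaults; look them up in the exported JSON.
    """
    patched = copy.deepcopy(workflow)  # leave the loaded template untouched
    patched[text_node_id]["inputs"]["text"] = text
    patched[image_node_id]["inputs"]["image"] = image_b64
    return {"prompt": patched}


# A tiny stand-in for an exported workflow (a real one has many more nodes).
example_workflow = {
    "6": {"class_type": "CLIPTextEncode", "inputs": {"text": "", "clip": ["4", 1]}},
    "10": {"class_type": "LoadImageBase64", "inputs": {"image": ""}},  # hypothetical node
}

payload = build_prompt_payload(example_workflow, "a moon above a beach horizon", "aGVsbG8=")
body = json.dumps(payload).encode("utf-8")  # bytes ready to send in an HTTP POST
```

ComfyUI’s bundled API example posts a body of this shape to the `/prompt` endpoint of a running ComfyUI server; the server address depends on the local setup. Copying the workflow before patching means the same template can be reused for every new screenshot.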

first experiment

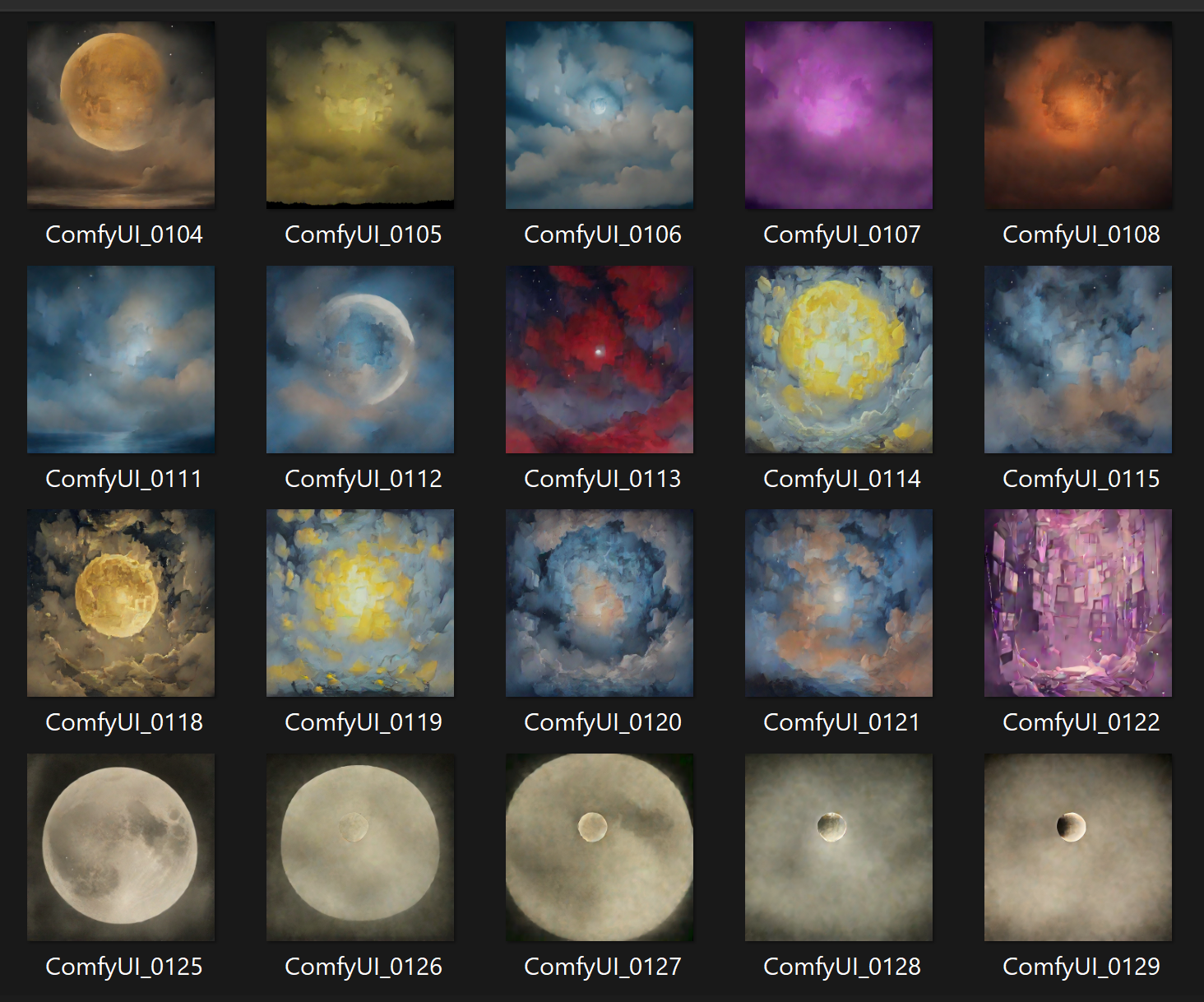

output images in directory

early results

Workflow – Moon over horizon

Workflow – A being in the universe

Workflow – Two planets in a cloudy universe

Inspiration – release of SDXL Turbo

Generating images in near-real-time was inspired and made possible by the release of SDXL Turbo (28 Nov, 2023), a text-to-image model that is much faster than standard models (~300 ms per generation vs. ~6 seconds). In reaction to the release, my friends and I had a conversation about what would be faster than ‘text-to-image’, since generating 3 images per second is faster than I can type a new input. Someone jokingly suggested “thought-to-image”. With this app using a version of ‘pose-to-image’, we’ve found some middle ground.

Experience gained

- VS Code – Managing a project in coding software

- GitHub – Asked for and received help from a GitHub repository owner

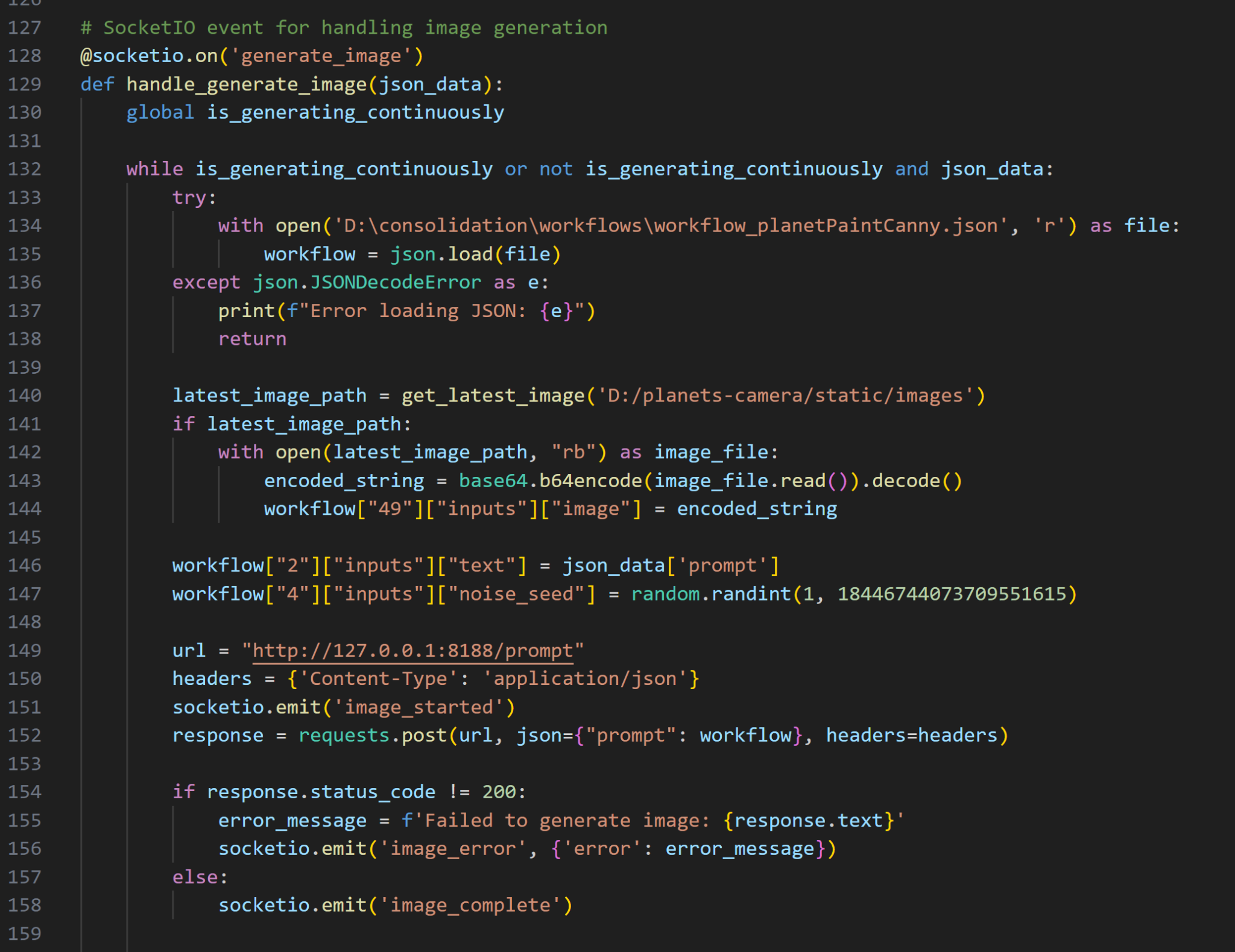

- Encoding – Learned about base64 image encoding

- ComfyUI – Learned to use ComfyUI in code! This is a personal achievement!

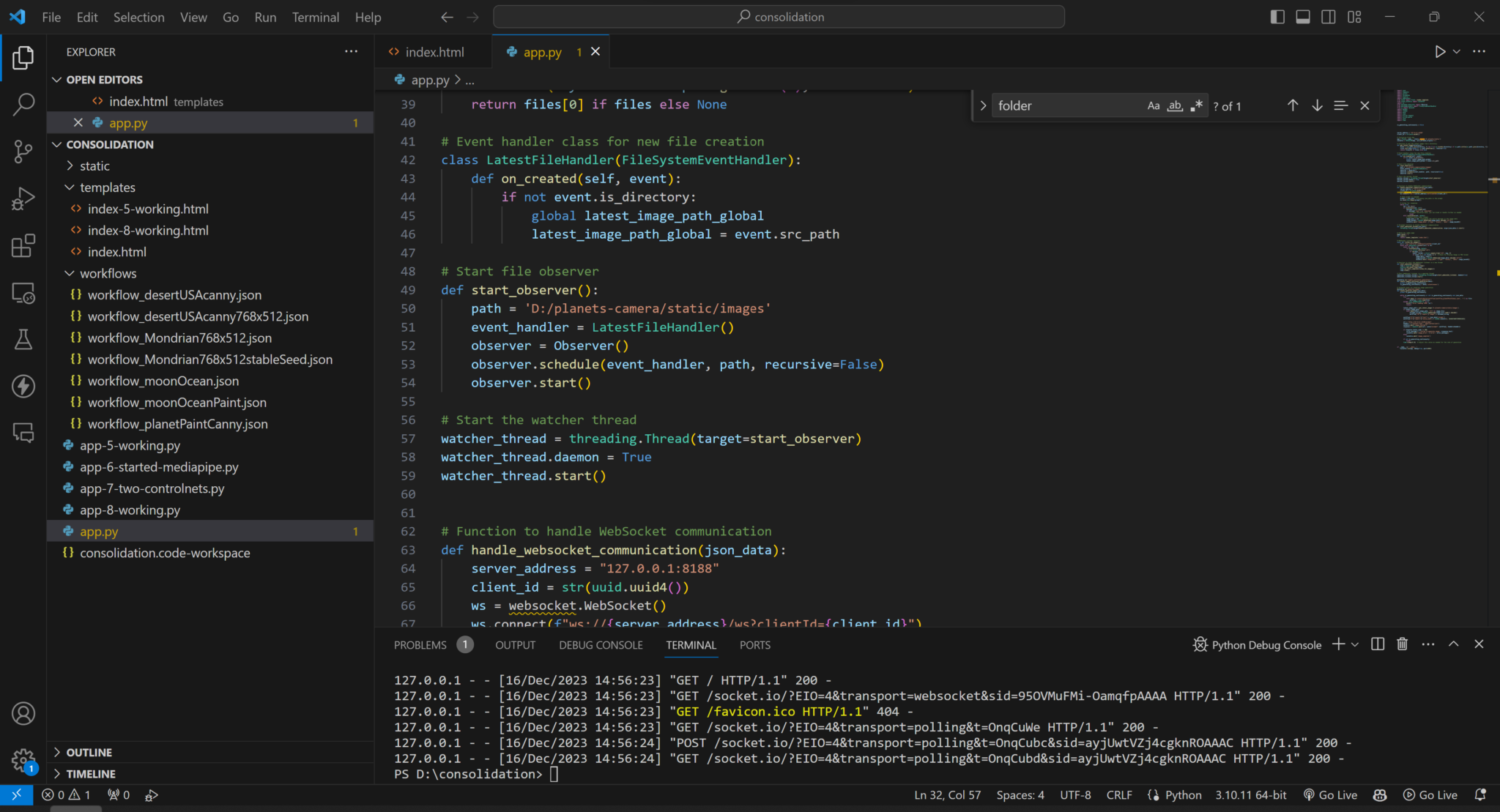

Microsoft VS Code, with built-in file explorer, built-in terminal for test-runs, and AI assistant “GitHub Copilot”

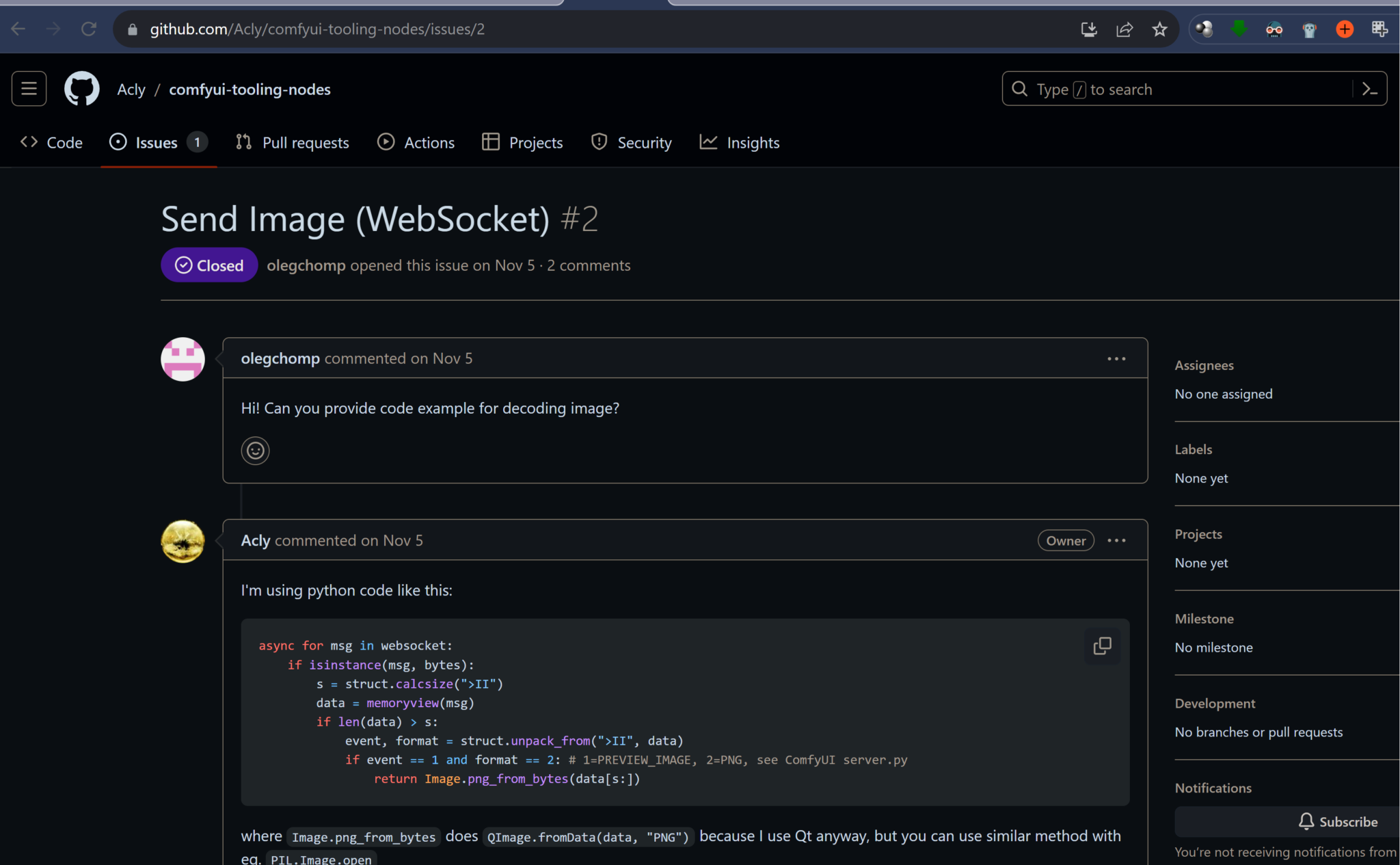

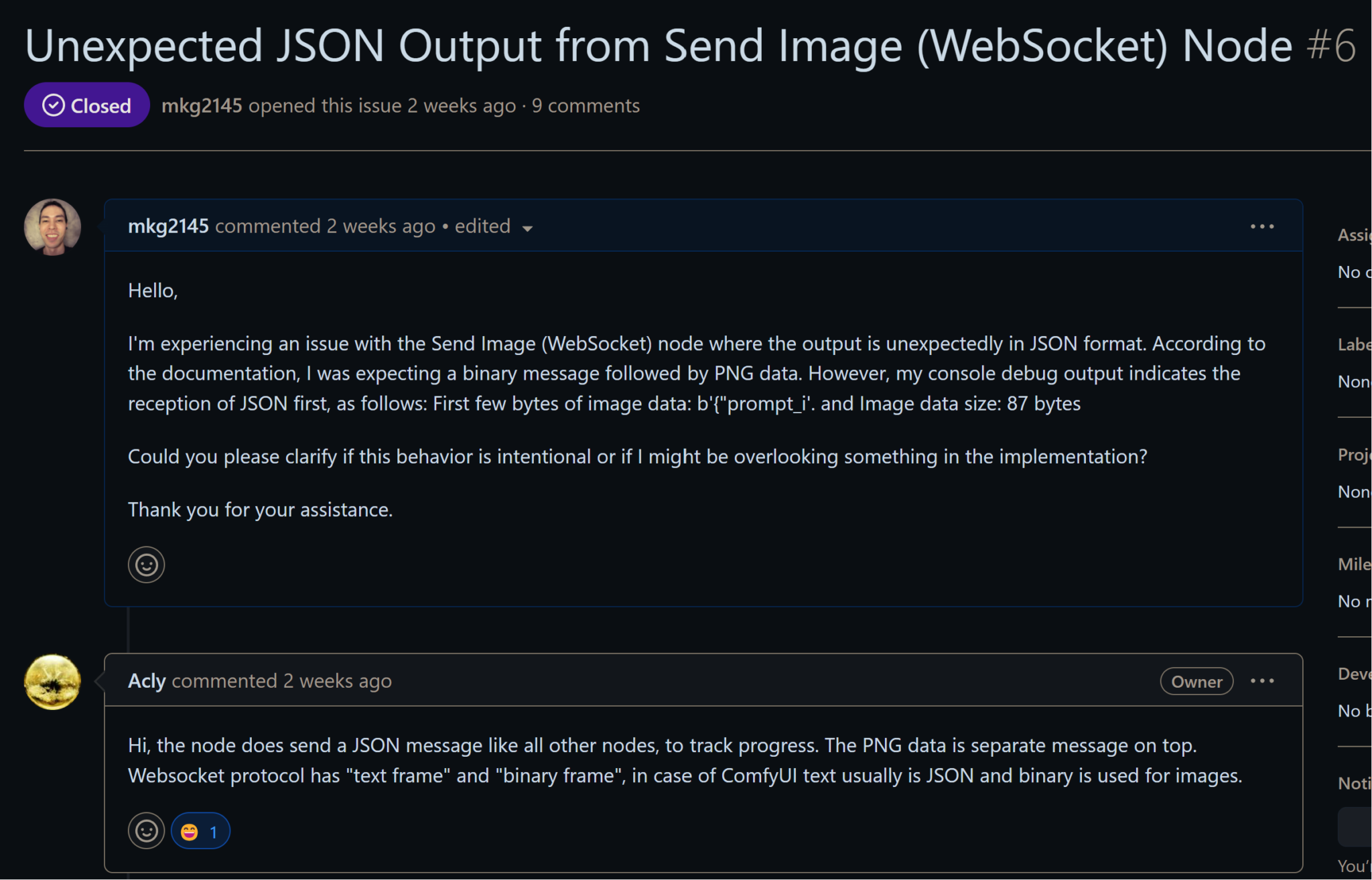

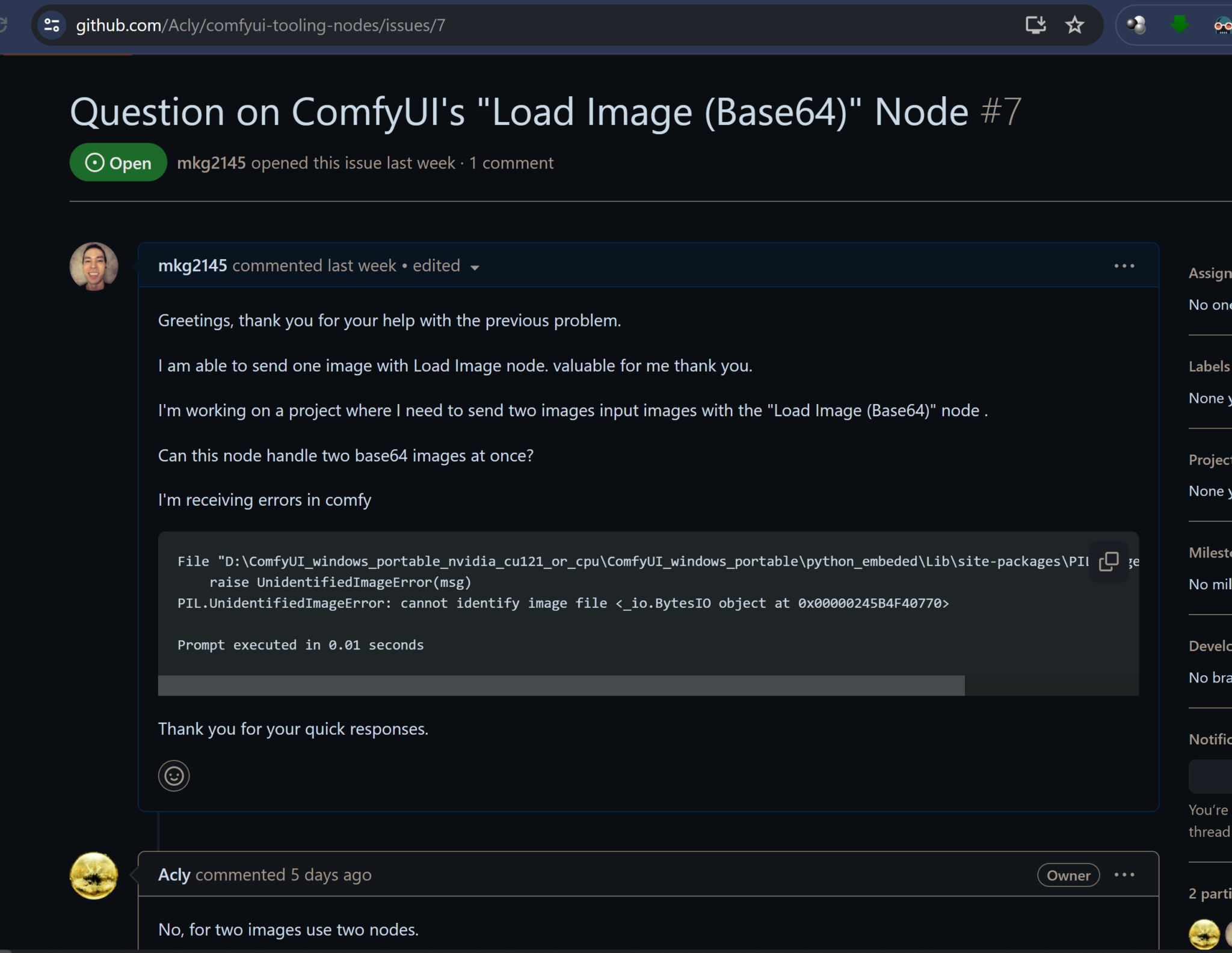

This GitHub ‘issues’ page helped me with the API. It explains sending and receiving images with ComfyUI. Sending requires encoding to base64 and receiving involves decoding the output image.
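The encode and decode steps can be illustrated with Python’s standard `base64` module. This is a generic sketch rather than the exact code from the issue thread or from ‘app.py’. The helper names are made up for the example, and the handling of a `data:` URL prefix is an assumption about what a sender might include.

```python
import base64


def encode_image(path: str) -> str:
    """Read an image file and return its contents as a base64 text string."""
    with open(path, "rb") as f:
        return base64.b64encode(f.read()).decode("ascii")


def decode_image(image_b64: str) -> bytes:
    """Turn a base64 string back into raw image bytes.

    If the string is a data URL ("data:image/png;base64,...."), the part
    before the comma is dropped first.
    """
    if image_b64.startswith("data:") and "," in image_b64:
        image_b64 = image_b64.split(",", 1)[1]
    return base64.b64decode(image_b64)
```

The decoded bytes can then be written to a file or handed to an image library for display.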

Issue #1: My first help request to the repository owner. I was not decoding the image from the ComfyUI API properly.

Issue #2: I was attempting to encode two images into one ComfyUI input node. Solution – each image gets its own node.

The most difficult part of the code: encoding an image to base64 and sending it to ComfyUI. I was stuck for days without progress.

Monitoring a directory for the latest image. This is the same function I used in my April 2023 application ‘voice-to-notion’ – posted on my blog and GitHub page (mkg2145)

The user interface of ComfyUI. This is a visual representation of the JSON file (prompt) that ‘app.py’ sends to ComfyUI. I used two new nodes for this project: “load image Base-64” and “save image Base-64”.

Testing workflows – adjusting settings, prompts, and input images and swapping nodes

Demonstrations

Early test – ‘app_camera.py’

A professor using the app

Testing the app in class

Using the app with friends in the Social Work library

Links

- ComfyUI – Image generator: https://github.com/comfyanonymous/ComfyUI

- SDXL Turbo install guide and demonstration: https://www.youtube.com/watch?v=e-dqCHaI4U8&t=84s&pp=ygUKc2R4bCB0dXJibw==

- GPT4 – Coding assistant: https://chat.openai.com/

- Visual Studio Code – Coding software with GitHub Copilot: https://code.visualstudio.com/

Scratched projects (projects started, but aborted)

Newsguesser – Game to guess the news. The user is presented with an image of a story in the news and tries to guess the headline. The image is generated by an image generator that takes the headline and subheading of a news story as its input prompt. An AI compares the user’s guess with the actual headline and gives a score.

Rewind Time Machine – Revisit specific days in the past. User selects day on calendar. The UI shows